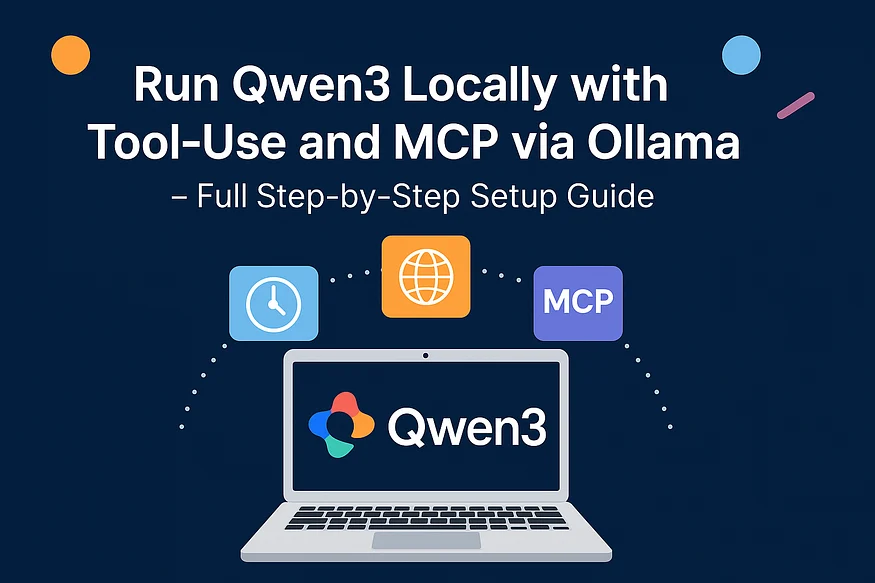

Learn how to install and use Qwen3 with the Qwen-Agent framework, enable MCP tools such as time and fetch, and run everything locally using Ollama.

Introduction

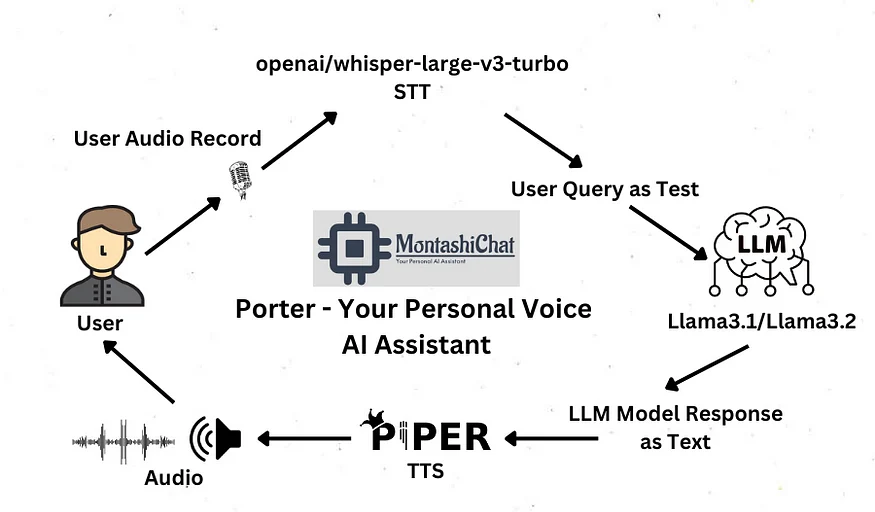

Looking to build your own AI assistant that runs on your own machine and supports real-time web access, time queries, and code execution? Qwen3 is Alibaba’s latest open-source large language model (LLM), offering strong performance, tool-use support, and a modular design suited to advanced AI assistants. With Ollama for easy local model serving and the Qwen-Agent framework for agent orchestration, you can run a fully functional LLM-powered assistant on your machine, with no external API keys, cloud billing, or round trips to a hosted model.

This guide walks through running Qwen3 locally using Ollama, integrating it with Qwen-Agent, and activating Model Context Protocol (MCP) tools like time and fetch. Whether you’re a developer, researcher, or AI enthusiast, the result is a privacy-friendly assistant that can retrieve real-time data, execute Python code, and adapt to complex workflows, all on your own hardware.

Step by step, you’ll learn how to:

- ✅ Run Qwen3 LLM locally using Ollama

- ✅ Enable MCP (Model Context Protocol) with tools like fetch and time

- ✅ Use Qwen-Agent to build your private smart assistant — no cloud API keys needed!

Why Choose Qwen3 + Qwen-Agent + MCP + Ollama?

- Qwen3: Powerful open-source LLM optimized for tool-use and local deployment.

- Ollama: Easiest way to run models locally via ollama serve.

- Qwen-Agent: A multi-functional agent framework built for modular tasks.

- MCP (Model Context Protocol): Enables Qwen3 to interact with local tools like code interpreter, fetch, and time.

With this setup, your AI assistant can:

- Retrieve real-time web data

- Execute Python code

- Access current local time

- Operate offline with full privacy

Setting Up Qwen3 with MCP and Ollama Locally

Step 1: Install Ollama and Pull Qwen3 Model

🖥️ Run these commands on your local terminal (Linux/macOS):

    # Install Ollama
    curl -fsSL https://ollama.com/install.sh | sh

    # Start the Ollama server
    ollama serve

Then, in a second terminal, pull the Qwen3 model:

    ollama pull qwen3

This makes Qwen3 available through Ollama’s OpenAI-compatible API at http://localhost:11434/v1, ready for local use.
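Before moving on, it can help to confirm that the server is reachable and that the model was actually pulled. The sketch below is one way to do that with only the Python standard library. It assumes Ollama’s native /api/tags endpoint, which lists local models as JSON with a models array whose entries carry a name field. The helper names model_is_available and fetch_local_models are illustrative and are not part of Ollama or Qwen-Agent.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default Ollama address used in this guide


def model_is_available(tags: dict, name: str) -> bool:
    """Return True if `name` (with or without a tag) appears in an /api/tags payload."""
    for entry in tags.get("models", []):
        full_name = entry.get("name", "")
        # Ollama reports names like "qwen3:latest"; accept a bare "qwen3" too.
        if full_name == name or full_name.split(":", 1)[0] == name:
            return True
    return False


def fetch_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> dict:
    """Ask the running Ollama server which models it has pulled."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    try:
        tags = fetch_local_models()
    except (OSError, ValueError) as exc:
        # Connection errors and malformed JSON both end up here.
        print(f"Could not reach Ollama at {OLLAMA_URL}: {exc}")
    else:
        print("qwen3 available:", model_is_available(tags, "qwen3"))
```

If the check reports that the server is unreachable, make sure ollama serve is still running in its own terminal.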

Step 2: Clone and Install Qwen-Agent with MCP Support

Next, clone the official Qwen-Agent repository and install it with the required extras for GUI, retrieval-augmented generation (RAG), code interpretation, and MCP support. Run the following commands:

    # Clone the repo
    git clone https://github.com/QwenLM/Qwen-Agent.git

    # Install with all extras
    pip install -e "./Qwen-Agent[gui,rag,code_interpreter,mcp]"

The -e flag installs the package in editable mode, making it easier to experiment with its internals if needed. After installation, you can write a Python script to connect the assistant to Ollama and enable tool use.
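A quick way to confirm the install worked is to check that the packages can be found by the interpreter you plan to use. This is a minimal sketch: qwen_agent is the package imported later in this guide, while mcp is assumed to be the module name provided by the mcp extra. The helper missing_packages is a hypothetical name used only for illustration.

```python
import importlib.util


def missing_packages(names):
    """Return the top-level package names that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Assumption: the mcp extra installs a top-level module called "mcp".
    missing = missing_packages(["qwen_agent", "mcp"])
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("Qwen-Agent and its MCP dependency are importable.")
```

Run it with the same Python environment you used for pip install, since a different virtual environment will not see the editable install.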

Step 3: Prepare Your Python Script

Here’s the complete code to run a tool-using assistant with MCP + Ollama:

    from qwen_agent.agents import Assistant

    # Step 1: Configure your local Qwen3 model (served via Ollama)
    llm_cfg = {
        'model': 'qwen3',
        'model_server': 'http://localhost:11434/v1',  # Ollama API
        'api_key': 'EMPTY',
    }

    # Step 2: Define your tools (MCP + code interpreter)
    tools = [
        {'mcpServers': {
            'time': {
                'command': 'uvx',
                'args': ['mcp-server-time', '--local-timezone=Asia/Shanghai']
            },
            'fetch': {
                'command': 'uvx',
                'args': ['mcp-server-fetch']
            }
        }},
        'code_interpreter',
    ]

    # Step 3: Initialize Qwen-Agent Assistant
    bot = Assistant(llm=llm_cfg, function_list=tools)

    # Step 4: Send a user message with a URL
    messages = [{'role': 'user', 'content': 'https://qwenlm.github.io/blog/ Introduce the latest developments of Qwen'}]

    # Step 5: Run the assistant and print results
    # bot.run yields intermediate results; the loop keeps only the last one
    for responses in bot.run(messages=messages):
        pass
    print(responses)

This script initializes the Qwen3 model using the Ollama backend and registers two MCP tools (time and fetch) along with the built-in code_interpreter. The assistant then processes the user message, calling tools or running code as needed, and returns the response.
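The script prints the whole list of response messages, tool calls included. For a multi-turn chat you usually want only the final assistant text, plus a history that grows with each turn. The sketch below shows one way to do that. It assumes each item in the final result is a dict with role and content keys, as in the message passed to bot.run above; final_answer and chat_turn are hypothetical helpers, not Qwen-Agent functions.

```python
def final_answer(responses):
    """Return the text of the last assistant message with non-empty string content."""
    for message in reversed(responses):
        content = message.get("content")
        if message.get("role") == "assistant" and isinstance(content, str) and content.strip():
            return content
    return ""


def chat_turn(bot, history, user_text):
    """Append a user message, run the agent, and fold its reply into the history."""
    history.append({"role": "user", "content": user_text})
    responses = []
    # bot.run yields intermediate results; the last one holds the full reply.
    for responses in bot.run(messages=history):
        pass
    # Keep tool calls and tool results in the history so later turns can see them.
    history.extend(responses)
    return final_answer(responses)
```

With the bot from the script, a call such as chat_turn(bot, [], 'What time is it in Shanghai?') would run one turn and return just the reply text, and passing the same history list again continues the conversation.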

Bonus: Install MCP Tools (uvx, mcp-server-fetch, mcp-server-time)

Install the MCP servers via uvx, the tool runner that ships with uv. You can follow their setup guides here:

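With the command and args entries shown in the script, Qwen-Agent starts each server through uvx itself, so uvx needs to be available on your PATH for the tools to be callable via MCP.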
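Since both MCP servers in the script are launched through uvx, a missing uvx executable is a likely cause of tool failures. The sketch below checks for it and rebuilds the same tool list with a configurable timezone. The names uvx_available and mcp_tools are illustrative helpers; the server names and the --local-timezone flag are taken from the script above.

```python
import shutil


def uvx_available() -> bool:
    """Return True if the uvx executable can be found on PATH."""
    return shutil.which("uvx") is not None


def mcp_tools(local_timezone: str = "Asia/Shanghai") -> list:
    """Build the function_list used in the script, with a configurable timezone."""
    return [
        {"mcpServers": {
            "time": {
                "command": "uvx",
                "args": ["mcp-server-time", f"--local-timezone={local_timezone}"],
            },
            "fetch": {"command": "uvx", "args": ["mcp-server-fetch"]},
        }},
        "code_interpreter",
    ]


if __name__ == "__main__":
    if not uvx_available():
        print("uvx not found on PATH; install uv before starting the assistant.")
    print(mcp_tools("Europe/Berlin"))
```

The returned list can be passed as function_list when creating the Assistant, in place of the hard-coded tools variable.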

Output

After executing the script, you’ll get real-time, tool-enhanced responses like this:

Assistant: Here’s the summary of the latest Qwen developments from the blog…

Download or Pull Qwen3: Ollama

Official Qwen3 Hugging Face: Link

Conclusion

Combining Qwen3 with Qwen-Agent, MCP, and Ollama results in a capable local AI assistant that does not depend on any external cloud service for model inference. You can run multi-turn dialogues, enable real-time information retrieval, and execute Python code, all within your own infrastructure. This makes it a good fit for developers, researchers, and product teams interested in building intelligent agents with privacy, flexibility, and extensibility in mind. As Qwen3 continues to evolve, we can expect more robust support for complex tasks and smoother integration with custom tools, paving the way for more autonomous local AI agents.

Md Monsur Ali is a tech writer and researcher specializing in AI, LLMs, and automation. He shares tutorials, reviews, and real-world insights on cutting-edge technology to help developers and tech enthusiasts stay ahead.